What Did Humans Evolve to Eat?

What dietary adaptations characterize modern humans as a species, as distinct from our closest living relatives?

Not medical advice. All timelines are approximations.

Cows eat grass. Grizzly bears eat fish and berries. Cats eat whatever small animal they can catch. These are the foods each species is "meant" to consume. Cows can eat grain. Grizzlies can rummage through dumpsters. Cats can survive on vegetarian mush. However, the quality of an animal’s metabolic health depends on whether it’s consuming the foods it’s built for.

Each species has a mind-body adapted to seek out, consume, and extract nourishment from specific food sources. The anatomical and physiological traits that define a species result from long periods of adaptation to particular environments. Some animals are highly specialized for a narrow range of habitats and foods, while others are generalists. A species' digestive adaptations determine which foods it is best equipped to process—unlike cows, you and I cannot build muscle by chewing and swallowing grass.

Like many other mammals, humans can eat all kinds of stuff. We’ve clearly been doing just that, and it’s resulted in a metabolic health crisis. But what are human beings “meant” to eat? What types of foods did the evolutionary process sculpt our bodies to extract nourishment from most effectively? Understanding that question is key to understanding what types of foods will sustain your metabolic health.

People with strong opinions will tell you everything from “mostly plants” to “mostly meat.” As we’ll see, our natural history offers no singular answer, but there are key traits that provide a clear indication of diets that are metabolically sub-optimal. (Spoiler alert: humans are not herbivorous primates, and your body is not well-equipped for vegetarianism.)

The genus Homo is well over two million years old. We evolved generalist capabilities that enabled humans to adapt to environments from the tropics to the Arctic, which sets us apart from our closest living relatives. Despite the adaptable, general-purpose nature of our species, specific lineages of modern humans have spent tens of thousands of years adapting to different food environments around the world. This has given rise to distinct metabolic profiles across humanity: each of us differs to some extent, down to the enzymatic level, in the types of foods our bodies can tolerate and extract nutrition from.

All of this will force us to carefully keep in mind two key things simultaneously:

There is no singular human diet to which all living people are “optimized.” Substantial metabolic differences exist between human lineages, meaning the “goodness” of a given diet depends on which lineage you belong to. These differences reflect very recent evolutionary changes within our species, which emerged on the scale of thousands to tens of thousands of years.

Despite significant metabolic differences between human lineages, there is a “common core” of adaptations that characterize our species—adaptations common to all people but distinguishing us from our closest living relatives and pre-modern hominin ancestors. These commonalities reflect a longer timescale of evolutionary change—the hundreds of thousands of years over which modern Homo sapiens arose and diverged from ancestral hominids.

This post will focus on adaptations that distinguish human beings as a species. These features are shared across human populations and reflect the timescales of evolutionary adaptation (100,000s of years) over which we diverged from other apes and archaic humans. In a separate post, we will focus on more recent evolutionary changes that produced metabolic differences between living human lineages. These differences determine why you and I might react differently to dairy, long-chain fatty acids, or other dietary components.

Before diving into the digestive and metabolic adaptations that characterize Homo sapiens as a species, we need to develop a sense of evolutionary time. How long does it take adaptations to emerge in response to changes in diet? How much time does it take to transform a species of one dietary type into another? What’s possible or impossible on a timescale of 10,000 years vs. 200,000 years?

Human Phylogenetics & the Speed of Evolutionary Change

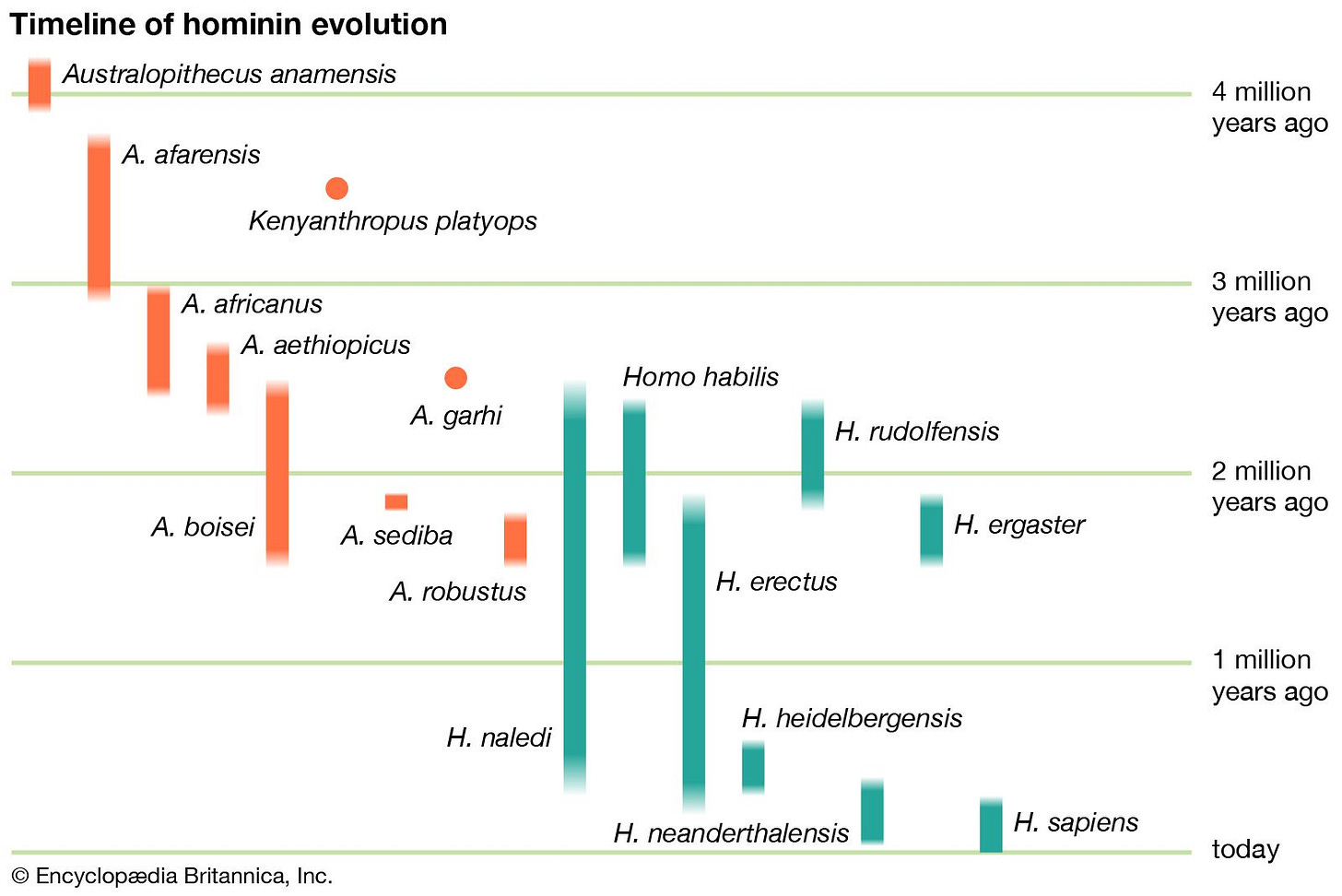

A brief, coarse-grained timeline of hominin evolution:

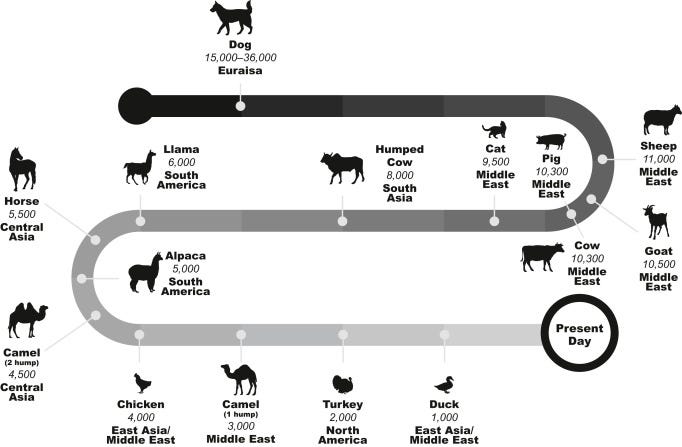

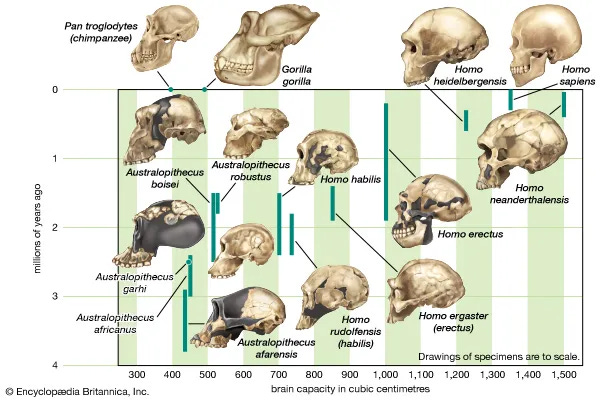

~6M years ago: Last common ancestor of humans and our closest living relatives, chimps and bonobos. Omnivorous but largely plant-based diet.

~4M years ago: Proto-human species like Australopithecus. Although they had diverged from the other great apes, they retained an omnivorous but largely plant-based diet.

~2.5M years ago: The earliest human species (genus Homo) arose and diversified. They were still omnivorous, with more animal consumption over time. Hunter-gatherer strategies emerged, aided by specialized tools for hunting and scavenging meat and, later, by the use of fire for cooking.

~200K years ago: Anatomically modern humans emerged, living as bands of omnivorous hunter-gatherers with much more animal consumption compared to other apes and earlier hominins, with superior hunting and scavenging abilities.

~60-70K years ago: Modern Homo sapiens begins radiating out of Africa. As this happens, different human groups begin adapting to separate food environments around the world.

~12K years ago: Agriculture is developed, eventually giving rise to large-scale societies. The direct descendants of farmers partially adapt to this novel sedentary lifestyle and food environment.

The Paleolithic spans from the emergence of the genus Homo ~2.5M years ago up to the development of agriculture around 10,000 BCE. Anatomically modern humans emerged within this period, roughly 200K years ago. As a species, we spent more than 15x as much time living and dying as Paleolithic hunter-gatherers as any lineage has spent farming since the Neolithic began. And remember: only a subset of living humans are direct descendants of Neolithic farmers. A large proportion of humans living today are descended from lineages that never had a long tradition of sedentary, agricultural living.

Some questions about timing and the speed of evolution come to mind:

Is ~2.5M years (the approximate age of our genus) enough time to make us substantially different from our mostly plant-eating cousins, like Australopithecus and chimpanzees? (Spoiler: yes.)

Is ~12K years of farming enough time to “erase” the traces of our hunter-gatherer past and become fully adapted to a sedentary lifestyle and agricultural food environment? (Spoiler: no.)

If we spent >200K years as hunter-gatherers but no more than 12K years as agriculturalists, how much of our metabolism reflects our extended history as hunter-gatherers vs. more recent history living in sedentary, farming-dependent societies? (Spoiler: it depends strongly on genetics, but we all share digestive adaptations common to our pre-modern, pre-agricultural ancestors.)

Assuming an average generation time of 20 years, anatomically modern humans spent on the order of 10,000 generations of time as hunter-gatherers, but no more than about 600 generations as sedentary farmers (and only for a subset of humanity). How much evolutionary change is possible on these short timescales?
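The generation counts above are plain division, and it can help to see them side by side. Below is a minimal Python sketch that assumes, as the text does, a fixed average generation time of 20 years; the helper name `generations` and the constant names are ours, for illustration only.

```python
# Back-of-the-envelope generation counts for the timescales in this post.
# Assumption: a constant average generation time of 20 years (the text's figure).

GENERATION_YEARS = 20  # assumed average human generation time, in years


def generations(years: float, generation_years: float = GENERATION_YEARS) -> float:
    """Approximate number of generations in a span of `years`."""
    return years / generation_years


# Approximate spans from the timeline above (years before present)
SPANS = {
    "Anatomically modern humans": 200_000,
    "Oldest farming lineages": 12_000,
    "Lactase persistence (upper bound)": 10_000,
}

for label, years in SPANS.items():
    # Format with a thousands separator and no decimals
    print(f"{label}: ~{generations(years):,.0f} generations")
```

Passing a longer generation time, e.g. `generations(12_000, 25)`, gives 480 rather than 600. Every count shrinks proportionally, so the 20-year assumption, if anything, overstates how many generations farming lineages have had to adapt.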

To learn more about paleoanthropology, try these M&M episodes:

M&M #160: Diet, Hunting, Culture and Evolution of Paleolithic Humans & Hunter Gatherers | Eugene Morin

M&M #10: Human Evolution, Neanderthals, Ancient DNA, Paleoanthropology & Human Diversity | John Hawks

Fast Evolutionary Change: What can change in 10-50K years?

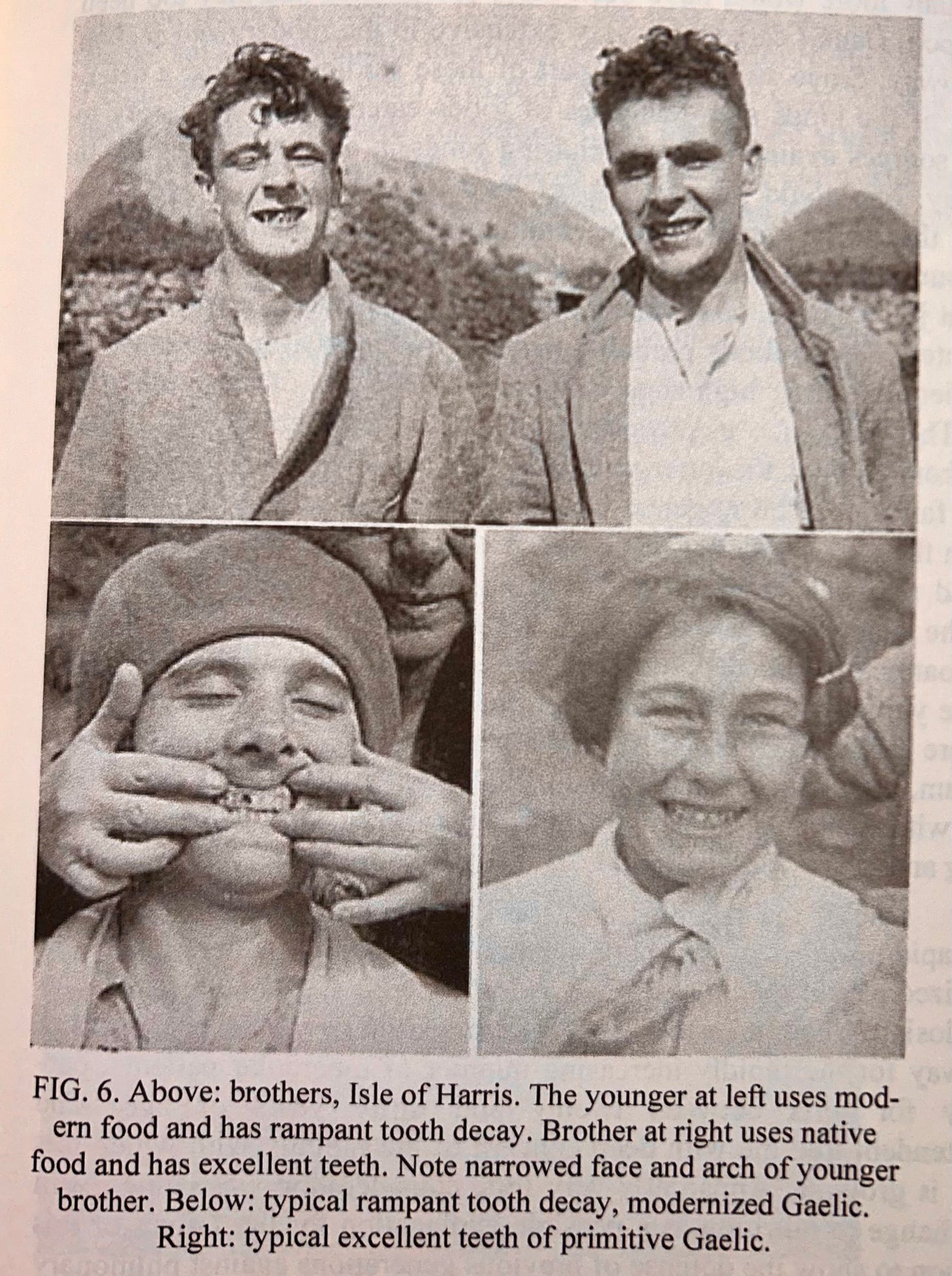

When there’s an evolutionary mismatch between an animal’s present and ancestral food environment, we tend to observe a diet-induced deterioration in metabolic health. The bigger the mismatch, the faster the animal will deteriorate. In the early 20th century, Weston Price observed astounding levels of physical degeneration in humans (rampant tooth decay, shorter stature, and greater vulnerability to infectious disease) when people transitioned from traditional to industrialized diets. This often took place within a single generation and was seen in cultures in every corner of the globe.

In the presence of food the body is not well-equipped to process, physical degeneration can happen quickly (within a single generation). How quickly can an animal’s metabolism adapt to a novel food environment? One way to calibrate our intuition for this is to consider examples of the fastest-spreading dietary adaptations known in humans. This tells us how quickly specific adaptations can spread if they confer a strong survival advantage.

Lactase persistence (dairy tolerance in adulthood) emerged shortly after the dawn of agriculture, probably no more than 10K years ago. It spread quickly through certain human populations (those with domesticated dairy animals), but even there it did not spread completely. Thus, 10K years (roughly 500 generations) isn’t quite enough time for even a “very fast” dietary adaptation to spread completely. That’s why lactase persistence is not universal, even within the populations where it’s most common.

Lactase persistence is a single, genetically simple metabolic trait. What about more substantial metabolic transformations?

Agriculture and large-scale sedentary living have been around for no more than 12K years. Before that, pretty much every human in pre-history was a hunter-gatherer. Is 12K years enough time for evolution to largely erase “hunter-gatherer-ness”? We can look at examples of very rapid species evolution to get a sense of what’s possible in 12K years. The fastest changes come from domestication, when humans drive rapid evolutionary change in animals through deliberate, selective breeding.

Consider domestic cats and dogs. We began domesticating cats and dogs no more than 10K and 40K years ago, respectively. Domestic cats are different in many ways from their wild counterparts, but they’re not that different. Even with the aid of human breeding, cats retain most of their wild instincts. They also retain their dietary needs as carnivores, as do dogs.

Over the past 40K years or so, we’ve bred dogs of all shapes, sizes, and temperaments. Starting with wolves, we created everything from greyhounds to corgis in much less time than our own species has existed. Although individual breeds differ in physical appearance and personality, they generally retain core features of their wild ancestors: social tendencies, a desire to hunt and kill small animals, etc.

Despite tens of thousands of years of intentional breeding, domestic dogs and cats retain their essential carnivore nature. Sure, they can survive on the manufactured plant slop they’re often fed today, but their bodies aren’t “meant” for it—there’s a mismatch between their present food environment and their biology, which is why more pets than ever suffer from metabolic syndrome. The metabolic health pandemic of our own species speaks to a similar mismatch.

Tens of thousands of years (roughly 500 to 2,500 generations, at 20 years per generation) is enough time for specific metabolic adaptations to arise and spread, but not enough time for them to become universal features of human lineages (let alone the entire species). Tens of thousands of years is not enough time to completely transform an animal’s metabolism so that it can maintain good metabolic health in a novel food environment. Thus, 12K years has not been enough time for humans to adapt to our modern, post-agricultural food environment.

Even if we weren’t inventing new processed foods and exposing ourselves to novel ingredients every business quarter—even if our food environment had remained perfectly stable since the dawn of agriculture—it is unlikely that even the direct descendants of the first Neolithic farmers would be “fully” adapted to the post-agricultural food environment, without a trace of their hunter-gatherer roots. (Recall again: only a subset of humanity belongs to this particular lineage).

What about longer timescales of evolutionary change? How long does it take to “totally transform” a species from one dietary type to another? Our genus arose over two million years ago, and the lineage leading to it diverged from the other great apes millions of years before that. Is that enough time for our dietary requirements to have strongly departed from the plant-heavy diets of our evolutionary cousins?

Slow Evolutionary Change: How much time is required for substantial metabolic transformation?

All animals retain traces of their evolutionary past. Some of these extend very deep in time, all the way back to the last universal common ancestor. Every known organism shares biological features related to core cellular functions like DNA replication, protein synthesis, and so on. Nonetheless, it’s clearly not useful to study E. coli as a guide for what to buy at the grocery store. The last common ancestor of bacteria and humans is “too old” to be helpful here.

But how do we determine how old “too old” actually is when it comes to thinking about the human diet in a comparative, cross-species fashion? Does it make sense to look to our closest living relatives as a guide to healthy living?

Humans and chimps are separated by about 6 million years. Both species are nested within a larger branch of the primate family tree that includes all Old World primates, many of which were frugivorous (fruit-eating). We retain traces of this deep ancestry in some of our hallmark primate adaptations: three-color vision and lack of endogenous vitamin C production. Ancestral primate species gained and lost those traits, respectively, tens of millions of years ago, driven by a dietary environment that required distinguishing reds from greens, with plentiful exogenous vitamin C.

Given that we share these ancient diet-related traits with our primate cousins, should we look to chimps or other living primates as a guide for understanding what humans evolved to eat? Chimps eat a largely plant-based diet, with plenty of fruit and vegetables and modest intake of animal fat and protein. Come to think of it, that sounds a lot like the dietary recommendations made by the mainstream medical establishment. Doesn’t it make sense to look to our closest living relatives as a guide to healthy, natural eating?

No. This is a bad strategy.

Several million years (and potentially even hundreds of thousands of years) is more than enough time for substantial metabolic changes to evolve. As we’ll unpack in more detail below, there is clear evidence that for the ~2.5M years our genus has existed, ancestral humans came to rely more and more on animal foods over time. Although we’ve retained our identity as fruit-loving omnivores, Homo sapiens evolved a variety of anatomic and digestive adaptations that indicate a clear shift away from a predominantly plant-based diet and towards an omnivorous one—a diet in which animal meat featured much, much more heavily than it did for our primate cousins.

Summary: How to think about evolutionary timescales when studying the dietary needs of humans as a species

~10K years: Enough time for individual metabolic adaptations to spread widely, but not completely. This will only happen with strong selective pressure and is not enough time to fully “switch” an animal away from lifestyle traits that persisted for hundreds of thousands of years (or more). This is the time frame of the modern era, since the dawn of agriculture.

~10K-70K years: Enough time for distinct lineages of a species to emerge and adapt to different food environments. It is not enough time to erase all shared aspects of metabolism that characterize the whole species relative to others. This is the time frame over which Homo sapiens spread across the globe.

~70K-2.5M years: Enough time for substantial digestive evolutionary change to emerge and stabilize within a species, such that its dietary needs are no longer directly comparable to its deeper evolutionary ancestors.

2.5M+ years: Species sharing a common ancestor several millions of years ago may retain many similarities, but there is no guarantee their dietary requirements or metabolic needs will be comparable.

The focus of the rest of this post will be on specific anatomic and digestive adaptations that characterize modern humans as a species. This roughly corresponds to the 2.5M years since the genus Homo emerged, including the 200,000 years of time anatomically modern humans have existed (largely in Africa), prior to our radiation across the globe. This is the timescale over which the metabolic and digestive adaptations common to all living humans evolved.

The species-wide adaptations we explore in this post can inform general, high-level dietary choices, such as:

Are vegetarian or vegan whole-food diets likely to support metabolic health?

Did Homo sapiens ever subsist on a largely carnivorous diet, or has our species always been highly omnivorous?

What types of diets reliably lead to a rapid decline in human health?

Why do animal vs. plant whole foods differ in their protein and nutrient bioavailability?

The Human Species: Digestive adaptations that characterize Homo sapiens

What “dietary type” are human beings?

If you wish to think in ideological terms, join a tribe and ask them what to believe. The Vegan Diet tribe says Thou Shalt Not Eat Animals. The Carnivore Tribe will tell you to eat only animals, not plants. Everyone has their convictions. For some tribes, these have explicitly religious origins (e.g. Seventh-day Adventists’ promotion of vegetarianism). For others, articles of faith are camouflaged as ‘scientific’ knowledge.

Here we take a different approach, using comparative zoology and phylogenetics to triangulate what the human body is adapted to. Our intent is to avoid cherry-picking from the empirical record to suit any pre-conceived moral attitude we might have. (In my experience, most people proceed in the opposite fashion—they start from axioms based on raw moral intuition, then warp their interpretation of empirical data to fit prior moral beliefs.)

We will briefly cover deep hominin evolution, looking at the dietary changes after Australopithecus and the earliest species of Homo emerged. What did the diets of the earliest human species look like over a million years ago, and how did they change with the emergence of anatomically modern Homo sapiens 200,000 years ago? After that, we will compare the digestive system of modern humans to other animals, starting at the head and working our way through the gut.

For more details on the evolution of the human diet, read these academic papers:

Paper 1: Effects of Evolution, Ecology, and Economy on Human Diet: Insights from Hunter-Gatherers and Other Small-Scale Societies | Pontzer & Wood (2021)

Paper 2: Evolutionary basis for the human diet: consequences for human health | Andrews & Johnson (2019)

Deep human evolution: Diets of early humans compared to Great Apes

Unless otherwise noted, much of this section on our deeper evolutionary history will draw from this excellent review of the evolution of the human diet.

Chimps and other great apes eat a largely plant-based diet, with the vast majority of energy coming from leaves or fruit. Chimps and bonobos get 60-70% of calories from fruits and ~20% from leaves. All great apes eat some insects, but chimps and bonobos eat more animal foods than others. Rates of animal consumption are highly variable across communities, but they will hunt and eat smaller primates (chimps) or small ungulates (bonobos) when they can. Although meat is greatly prized, they’re primarily plant-eaters.

The evolutionary branch leading to modern humans began diverging from this plant-heavy diet before Homo sapiens arose, which is why comparing ourselves to modern great apes is a poor guide for healthy eating. The earliest hominids, which arose and lived in the ballpark of 2-5 million years ago (e.g. Ardipithecus, Australopithecus), also seem to have had a largely plant-based diet.

Research Question: What dietary and metabolic adaptations define modern humans as a species?

Research Question: How did the diet of anatomically modern humans compare to more archaic species?

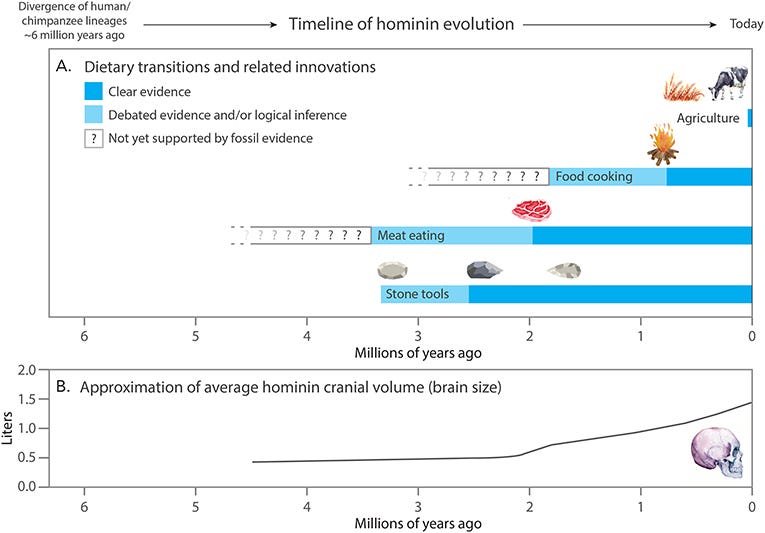

Around 2.5 million years ago, the fossil record shows evidence of more varied stone tools and cut-marked bones, suggesting growing reliance on meat. This was the time of “late Australopithecus” and “early Homo.” In other words, around the origins of our genus (but before Homo sapiens), the fossil record indicates a shift away from the heavily plant-focused foraging strategies of earlier hominins and great apes and the beginning of a more hunting and gathering style.

The control of fire for cooking was a crucial innovation with major dietary and metabolic implications. It is at least roughly 500,000 years old and could be over a million years old. Humans have been regularly consuming cooked food for at least 300,000-400,000 years, and no known human society subsists solely on raw food.

Cooking makes foods easier to digest and increases the energy gained per gram (e.g., by breaking down plant components that we lack the enzymatic ability to digest). Adaptations of the human digestive system reflect the hundreds of thousands of years we’ve been cooking (see below). This is why modern “raw foodists” tend to be underweight and experience problems like menstrual irregularities and fatigue. If humans were adapted to getting a substantial portion of our nutrition from raw plant foods, then we would have physiological adaptations enabling our bodies to extract and absorb nutrition from plant foods more efficiently.

The period in which cooking became common overlaps with the origins of a more serious hunting focus. By ~600,000 years ago, Paleolithic species of Homo were hunting large animals like horses and even elephants. Well before Homo sapiens arose, then, our genus had shifted to a dietary strategy distinct from that of our more distant relatives, one that included cooking and much more meat consumption.

Research Question: How have humans adapted to cooking food?

Research Question: What dietary and metabolic adaptations characterize humans as a species?

How much more meat consumption, exactly? It’s difficult to discern a precise answer to this from the archaeological record, which preserves only a tiny fraction of the past. The fact that early Homo was capable of acquiring large game animals like horses and elephants frequently enough for archaeologists to detect examples suggests much more substantial levels of meat consumption compared to our closest living relatives or more distant ancestors.

Some researchers think that early Homo may have been largely carnivorous for a period, although this appears uncertain. Whether or not early species like Homo erectus or pre-modern Homo sapiens were ever truly carnivorous, they were certainly eating much more animal meat than their predecessors. Our species was never largely herbivorous like chimpanzees. Even if our species passed through a phase with especially high meat consumption, we’ve likely been opportunistic eaters, using our brains to cleverly take advantage of any possible food source. That being said, there is evidence that at least some human populations, such as the Clovis people of North America (ancestors of many Native Americans), ate a largely carnivorous diet composed mainly of mammoths and other large mammals. This was likely true of some human populations in the Americas and Eurasia near the end of the last Ice Age.

Summary timeline of human & ape dietary change (approximate):

6M years ago: Last common ancestor of modern humans, chimps, bonobos.

6M - 2.5M years ago: Early hominins ate largely plant-based diets, likely with some amount of insect or small animal consumption, similar to present-day chimps and bonobos.

2.5M - 200K years ago: Early members of Homo engaged in hunter-gatherer lifestyles that included cooking, with more animal and less plant consumption than ancestral hominins. This likely became more and more true leading up to the origin of anatomically modern Homo sapiens, as evidence suggests more hunting and scavenging of animal meat.

200K years ago: Our oldest “fully human” ancestors inherited the hunter-gatherer lifestyles and dietary adaptations of earlier Homo. Although omnivorous, their diets were substantially more animal-based than those of their predecessors, with cooking-enabled digestive adaptations and sophisticated strategies for group hunting of large game animals.

15K - 10K years ago: Farming, pastoralism, and other practices emerged as (some) human lineages became more sedentary, facilitating larger and larger group living.

<300 years ago: Industrialization begins, giving rise to the ultra-processed food environment of the present day.

Below, we will turn our focus to specific adaptations that characterize modern humans.

Comparative zoology: skull, jaws & teeth

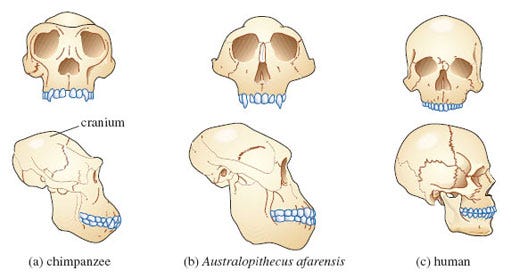

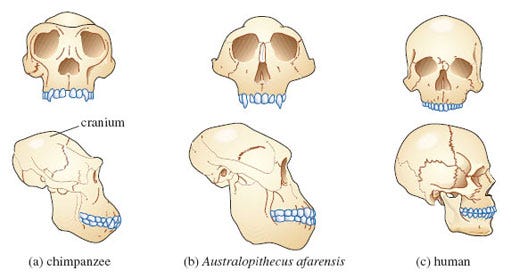

The skulls of early hominins and our closest living relatives differ markedly from those of modern Homo sapiens. Cranial volume often gets the most attention: our brains are bigger, and dietary shifts as the genus Homo emerged likely played some role in the evolution of our big brains. But our skulls differ considerably in other ways from those of species like chimpanzees and predecessors of Homo that consumed plant-heavy diets.

Chimps and archaic species that lived prior to the emergence of Homo had craniofacial adaptations that supported plant-heavy diets: thick enamel, powerful jaws, and large molars lacking well-developed shearing crests—traits that support lots of daily mastication, including crushing of hard food items. If you just watch species like chimps and gorillas, you’ll notice that they spend a lot of time chewing every day. Our craniofacial anatomy isn’t built to physically support that level of mastication.

If you want more detail on how the anatomy of Homo changed over time, read this. In short, by the time Homo erectus emerged roughly 2M years ago, there was already substantial change in craniofacial morphology, including larger cranial volume and reductions in the size of teeth and jaws, compared to earlier hominins. Many of these changes more or less continued as time went on and modern Homo sapiens arose. The overall reduction in jaw size is likely tied to our increased use of fire. Cooked food has higher energy density and is more easily digested, allowing us to consume a much lower volume of food compared to our closest primate relatives.

Bottom line: adaptations from the neck up clearly indicate that our species is not adapted to a raw, plant-based diet. Despite what some vegans might claim, we are not built like herbivores, or even like primates that eat mostly plants. If we were, we’d have larger jaws and different teeth. At the same time, we are also clearly not strict carnivores, which is why our teeth and jaws don’t look more like those of a cat or dog. Human craniofacial anatomy indicates a long history with a diverse, omnivorous diet, but with much lower plant content compared to other primates. This conclusion is reinforced by other features of our digestive system.

Comparative zoology: stomach

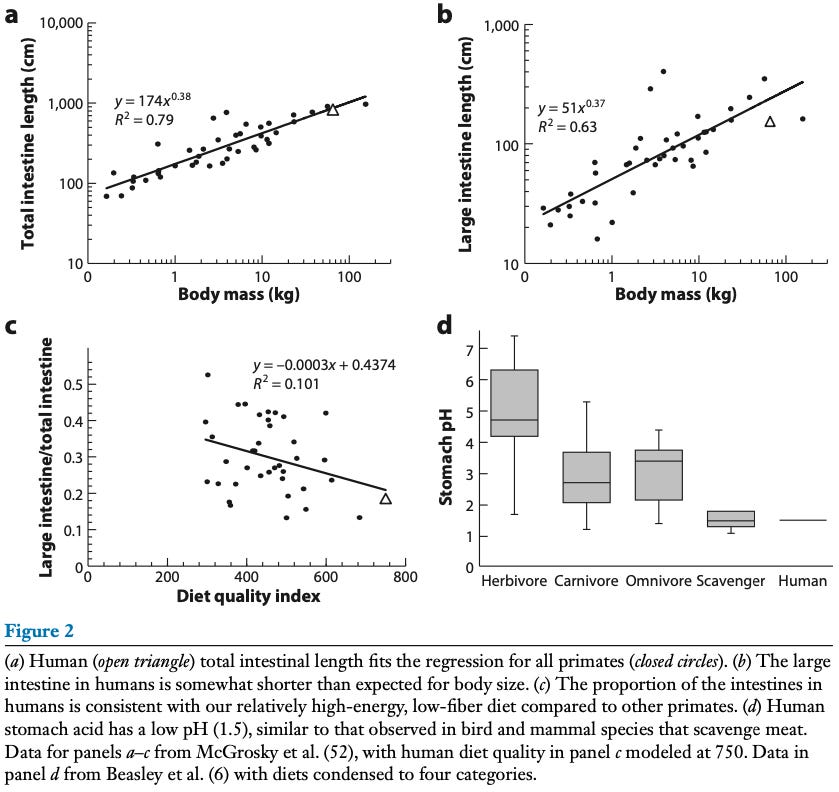

One feature of digestive physiology that clearly distinguishes animals by “dietary type” is stomach pH. Animals that eat meat have much more acidic stomachs (lower pH) than herbivores. This is true whether they eat only meat (carnivores) or a mix of animals and plants (omnivores). Meat-eating scavengers have the most acidic stomachs of all—they have to digest rotting meat, which they often scavenge from carnivorous predators.

How does human stomach pH compare to other animals? The answer may surprise some:

The human stomach is highly acidic, in line with what we see for mammals and birds that scavenge meat. Our stomachs are more acidic than those of either omnivores or carnivores and much more acidic than those of herbivores. With a pH of 1.5, human stomach acidity is closer to that of a turkey vulture than it is to a cat or especially a cow. High stomach acidity (low pH) acts as a microbial filter, restricting the entry of microbes into the GI tract. This has obvious adaptive value for scavengers, as they feed on rotting meat that may contain potentially harmful microbes.

But wait… are we really to believe that humans once commonly consumed scavenged meat, or even specialized in scavenging? Humans are clearly omnivorous, with a zoologically unique ability to cook food. I know people who can’t even deal with fresh sushi. Are we really to believe that early humans scavenged and ate rotting meat?

As anthropologist Eugene Morin explained to me on M&M #160, our skepticism may simply reflect modern bias:

Rotten meat is something that Westerners think is not edible. We found tons of evidence that it was being eaten and sometimes preferred by natives in the rotten state, because it makes digestion easier and some prefer the taste. And so, rotten meat was considered a ‘no-no,’ and then by doing some ethno-historical research, you discover that this is a cultural construct. Actually, rotten meat is palatable, and if you’re used to eating rotten meat you will be able to metabolize it in a reliable way and you will be fine. And when I say, ‘rotten meat,’ I mean meat full of maggots—like really, really transformed, really rotten. We have excellent examples of this.

He then tells an interesting historical story about a European expedition to the Arctic, during which an Eskimo man excitedly brought a rotting seal carcass back to the ship and was offended that the Europeans did not share his enthusiasm.

Stomach pH is a pretty telling trait: if humans were not scavenging meat for an extended period of time, it’s difficult to explain why we have the stomach acidity of a vulture. Even carnivorous animals that eat freshly killed meat, like predatory cats, have less acidic stomachs than ours. Perhaps by using their voices to coordinate group behavior while wielding tools like spears and torches, early humans innovated a new strategy for obtaining nutrient-dense meals: stealing from predators. (There’s an interesting theory of language evolution based on the idea that early humans were “power scavengers.”)

Bottom line: the aversion many modern people have to raw and rotten meat is likely a cultural artifact. As Dr. Morin told me, scavenging meat was probably a food procurement strategy for much of human history.

Comparative zoology: small & large intestines

Total GI tract length is within the range of other primates, consistent with omnivory. However, other features of our gut differ in ways that further indicate a move away from the mostly plant-based diets of our primate cousins:

Shorter large intestines for our body size, likely reflecting reduced reliance on fiber fermentation and digestion (another indicator of movement away from a plant-based diet).

A lower large intestine / total intestine length ratio, consistent with a high energy density diet that likely reflects our ability to cook food.

Collectively, all of these digestive adaptations indicate that Homo sapiens did not evolve to eat a mostly plant-based diet resembling that of our closest living relatives. But to what extent were Paleolithic humans omnivorous? We clearly had adaptations that suggest a lot of meat scavenging, and early humans were known to be butchering large game animals. Were we largely carnivorous for an extended period? This is conceivable, but it’s hard to say for certain. Evidence suggests that Paleolithic humans were likely omnivorous, even if meat consumption was considerable:

“Some have interpreted these morphological changes and the archeological evidence for large-game hunting as evidence that Paleolithic hominins were hypercarnivores, obtaining more than 70% of their daily calories from animals. However, the sparse and uneven nature of the archeological record makes discerning the percentage contribution of animal and plant foods in the Paleolithic diet notoriously challenging. Butchered bones and stone hunting tools are more likely to be preserved in the fossil and archeological records than are the remains of plant foods or wooden tools used to harvest them. Several lines of evidence suggest a more even contribution of animal and plant foods to the Paleolithic diet, with considerable variation both geographically and temporally.”

[Read this paper for more detail.]

Homo sapiens evolved a large variety of traits that distinguish us from other primates and archaic humans, beyond those directly tied to digestion. Many coordinated changes in the brain and body reflect the unique evolutionary path that enabled humans to populate the entire world and eventually create civilization as we know it. Surveying every anatomic and physiological adaptation of our species is beyond the scope of this article, but changes in diet and metabolism are likely intimately bound up with the evolution of human brain size and behavioral complexity.

Beyond comparing the human body with those of other species, we can also look to the anthropological record. Agriculture and sedentary living arose only about 12,000 years ago. Early Homo may have been hunting and stealing meat in Africa for hundreds of thousands (or millions) of years, while our hunter-gatherer ancestors began their exodus from Africa more than 50,000 years ago. For tens of thousands of years before the dawn of agriculture, hunter-gatherers lived in and adapted to habitats around the world. What do we know about the typical diets of hunter-gatherers who lived closer to the present but never settled into sedentary, agricultural societies?

Comparative Ethnography: Animal & Plant Foods Across Human Hunter-Gatherers

Different human groups and cultures have clearly depended on a wide range of diets throughout the world. The !Kung foragers of Africa, Eskimos of the Arctic tundra, and tribes of the Amazon all ate different foods, with distinct mixtures of plants and animals. But are there any systematic trends in the consumption of plant vs. animal foods across as many traditional cultures as we have data for?

The short answer is: yes. The chart below plots data for 263 traditional human populations as a function of absolute latitude (distance from the equator). The y-axis is the percentage of diet that’s estimated to come from animals—dots lower down are cultures eating more plant-based diets, and those higher up are more animal-based.

There is a clear relationship between diet and latitude: the farther human groups live from the equator, the more heavily they tend to rely on animal foods. The vast majority of hunter-gatherers eat a healthy mix of animal and plant foods. On average, the overall bias leans somewhat toward animal foods, and this bias grows stronger with distance from the equator. By eye, the data break down roughly as follows in terms of the animal:plant food ratio:

“Largely plant-based” (30:70 or less): These cultures are rare (just a handful), mostly concentrated near the equator.

“Balanced omnivory” (40:60 to 60:40): There are a lot of these, but not at a great distance from the equator.

“Largely animal-based” (70:30 or more): There are also a lot of these, especially away from the equator.

There seems to be an overall bias toward animal-based diets. Many cultures consume a good balance of plant and animal foods. There are also many cultures with a clear animal-food bias, which is more common with distance from the equator. Very few traditional, pre-agricultural groups eat a strongly plant-based diet.

This dietary history explains the various adaptations of the human body described above, and why rapid physical degeneration has been consistently observed throughout the world whenever people transition from traditional to post-industrial diets. In the 1930s, Weston Price surveyed human groups all over the globe and observed rapid physical degeneration in every group once modern, post-industrial foods were adopted. This seemed to be tied to the presence of certain minerals and fat-soluble vitamins found mostly in animal products:

“It is significant that I have as yet found no group that was building and maintaining good bodies exclusively on plant foods. A number of groups are endeavoring to do so with marked evidence of failure. The variety of animal foods available has varied widely in some groups, and been limited among others.”

—Weston Price, Nutrition and Physical Degeneration (1939)

Bioavailability of Plant vs. Animal Foods

If you compare the bioavailability of nutrients in plant and animal foods, you will generally find, with certain exceptions, that nutrients from animal sources are more bioavailable to us than those from plant sources.

Why would this be? Hopefully, at this point, the evolutionary perspective makes the answer obvious: this is what our species is adapted to. Cows are very well equipped to filter toxins and extract nutrients from grass. The bioavailability of nutrients from grass is high for cows and low for us. Conversely, we are better adapted to extract nutrients such as amino acids from animal proteins than from plant proteins.

As a general rule, this applies to the bioavailability of other nutrients as well. For example, calcium tends to be more bioavailable from animal milk than from plant-based products, and animal foods are almost the exclusive natural sources of certain micronutrients, at least in highly bioavailable form (vitamin B12 is the clearest example). This is why strict vegetarian or vegan diets can lead to malnutrition and metabolic issues, especially in children. It is nearly impossible for your body to obtain all the nutrition it needs from a strictly plant-based whole-food diet, which is why individuals on those diets need to be very diligent about supplementation. None of this would make sense if humans had evolved on a largely plant-based diet.

Human Metabolic Diversity & Recent Evolution

In a separate article, I cover more recent evolutionary change: differences in dietary fat metabolism, which vary strongly among people living today. Despite the commonalities all living humans share compared with other animal species, there is substantial metabolic variation among living people. This variation dictates your body’s ability to effectively process foods ranging from dairy products to grains, starches, fatty acids, and more.

For a taste of the fascinating biology of human metabolic diversity, try scavenging from these resources:

Podcast: Evolution & Genetics of Human Diet, Metabolism, Disease Risk, Skin Color and Origins of Modern Europeans | Eske Willerslev

Podcast: Ancestral vs. Modern Human Diets, Seed Oils, Inflammation, Fat Oxidation, Influence of Industry on Food Science | Steven Rofrano

Paper: Selection in Europeans on Fatty Acid Desaturases Associated with Dietary Changes

Research Question: How does starch and grain metabolism vary among people?
